Using a Negative Prompt with Stable Diffusion + 5 Examples

Ever told an AI to generate a "photorealistic portrait" and gotten something that looks like a lost SCP file instead?

That’s where a negative prompt in Stable Diffusion comes in. Instead of just telling the AI what you want, you also tell it what to avoid — like extra limbs, distorted faces, or backgrounds that look like a rejected Windows XP wallpaper.

We’ll walk you through how to use negative prompts like a pro, and we’ll point you to a cool alternative to Stable Diffusion where you won’t have to learn all those pesky ins and outs.

In this article, we’ll cover:

- What is a negative prompt?

- How do negative prompts work?

- Should I always use a negative prompt when generating images?

- Step-by-step guide to using negative prompts in Stable Diffusion

- How many words should a negative prompt be?

- 5 negative prompt examples for different use cases

- Tips for making effective negative prompts

- Why are negative prompts important?

- FAQs

What is a negative prompt in Stable Diffusion?

Stable Diffusion is great at making photorealistic pics (it’s one of the best AI image generators), but it keeps taking things way too far.

You ask for a realistic portrait, and suddenly, the AI decides the person needs seven fingers, nightmare teeth, and a background straight out of a fever dream.

This is where a Stable Diffusion negative prompt comes in — it tells the AI, "Hey, maybe let’s NOT do that."

Here’s why negative prompts matter:

- Keeps AI from going full Lovecraftian horror when you just wanted a normal face: AI loves to add extra details where they’re not needed — extra limbs, asymmetrical eyes, or facial expressions that scream, "I know your secrets." Negative prompts help stop the madness.

- Prevents weird textures, blurry messes, and AI’s obsession with “dreamlike” chaos: Ever asked for a clean, high-res image and ended up with something that looks like it was painted on a potato? Negative prompts help prevent grainy, overexposed, or just plain cursed results.

- Tames AI’s creative energy so it doesn’t go off the rails: AI can be too creative for its own good. Without a negative prompt, it sometimes decides that your fantasy warrior needs half a dragon attached to their shoulder or that your scenic landscape should have floating eyeballs for no reason.

- Essential if you want polished, professional images instead of weird AI “artifacts”: Whether you’re making marketing graphics, concept art, or just trying to get an AI-generated cat that actually looks like a cat, negative prompts help remove distractions and refine the final product.

How do negative prompts work in Stable Diffusion?

Stable Diffusion interprets your prompt based on patterns from its training data and then throws something onto the canvas that may or may not be a complete disaster. While positive prompts tell the AI what to include, negative prompts tell it what to avoid, acting like a filter for unwanted chaos.

Here’s how Stable Diffusion processes negative prompts:

- It’s like training a dog, but the dog is an AI that loves adding extra fingers: Strictly speaking, a negative prompt doesn’t teach the model anything permanent. During each generation, it steers the image away from what you listed, like weird hands, distorted faces, or backgrounds that look like an abstract painting gone wrong.

- Negative prompts balance out the AI’s “best guesses,” so it doesn’t get weird: Stable Diffusion doesn’t actually understand images. It just predicts what should be there based on your prompt. Without a negative prompt, it measures its guesses against an empty prompt instead, so nothing specifically pushes it away from those creepy artifacts.

- CFG scale decides how much the AI listens to you — kind of like a stubborn toddler: The Classifier-Free Guidance (CFG) scale tells Stable Diffusion how strictly to follow your prompts. A higher CFG scale = the AI listens more. A lower CFG scale = the AI takes creative liberties (and not always in a good way).

- More steps mean more refinement — but also more room for AI weirdness: The number of sampling steps affects how detailed the AI makes the image. More steps usually mean better results, but without a strong negative prompt, the AI might overcomplicate things and start adding strange, unnecessary details.

- Negative and positive prompts work together like a “push and pull” system: You’re not just guiding the AI with what you want — you’re also blocking off certain paths, so it doesn’t end up in weird AI art territory. The key is finding the right balance so that the AI doesn’t get too chaotic or too restrictive.
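Under the hood, most Stable Diffusion tools implement that push and pull with classifier-free guidance: at each denoising step the model predicts noise twice, once for your positive prompt and once for your negative prompt (or an empty prompt if you left the box blank), then extrapolates away from the negative prediction by the CFG scale. Here’s a minimal sketch of that arithmetic on plain Python lists. The function name and the toy numbers are our own assumptions; a real pipeline does the same thing on large latent tensors.

```python
def guided_noise(pos_pred: list[float], neg_pred: list[float],
                 cfg_scale: float) -> list[float]:
    """Classifier-free guidance on toy noise predictions.

    neg_pred stands in for the "unconditional" prediction: it comes from the
    negative prompt, or from an empty prompt if you didn't write one.
    """
    if len(pos_pred) != len(neg_pred):
        raise ValueError("predictions must have the same length")
    # Start at the negative prediction, then move cfg_scale times along the
    # direction pointing from the negative prediction to the positive one.
    return [n + cfg_scale * (p - n) for p, n in zip(pos_pred, neg_pred)]


# Made-up two-number "predictions" so the arithmetic is easy to follow.
pos = [5.0, 2.0]
neg = [1.0, 4.0]
print(guided_noise(pos, neg, cfg_scale=1.0))  # [5.0, 2.0], i.e. just pos
print(guided_noise(pos, neg, cfg_scale=7.0))  # [29.0, -10.0]
```

At a scale of 1 you just get the positive prediction back; bigger scales push harder away from whatever the negative prompt describes, which is why the CFG scale and your negative prompt are so tightly linked.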

Should I always use a negative prompt when generating images?

Short answer? Not always. Long answer? If you enjoy rolling the dice and hoping your AI-generated portrait doesn’t come out looking like it crawled out of a crypt, go ahead and skip the negative prompt. But if you want consistent, high-quality results, using one is almost always a good idea.

Here’s when you should (and shouldn’t) use a negative prompt:

- Use a negative prompt when you’re tired of AI’s “artistic” results: If Stable Diffusion keeps giving you melty faces, melted hands, or backgrounds that look like what your granddad said Grateful Dead concerts were like, a negative prompt will help rein it in a bit.

- Skip it if you want AI to go wild (and possibly unhinged): Some artists actually want a little AI weirdness. If you’re experimenting with abstract art, dreamlike aesthetics, or just letting the model surprise you, turning off negative prompts might lead to more “creative” results.

- Essential for professional work (unless you want extra limbs in your client’s product ad): If you’re using AI for marketing materials, branding, or anything that needs to look polished, negative prompts are a must. No one wants a realistic sneaker ad where the shoe also has fingers.

- Not always needed for simple objects, but still useful: If you’re generating something straightforward (like a cube or a logo), you might not need a negative prompt. But if Stable Diffusion starts adding weird textures, lighting issues, or things that shouldn’t be there, adding a negative prompt can help clean it up.

- Always useful for anything that needs the extra precision: If you’re working with human faces, character design, or high-detail scenes, negative prompts will help avoid AI nightmares like stretched eyes, duplicate limbs, or smiles that belong in the movie Smile. (The sequel is pure nightmare fuel.)

Step-by-step guide to using Stable Diffusion negative prompts

Still ending up with stuff that looks half-human, half-glitch? Don’t worry, negative prompts can fix that. Whether you’re using Stable Diffusion online or running it locally, adding a negative prompt to clean up your images is just good thinking.

Here’s how to do it step by step:

Step 1: Access Stable Diffusion like a true AI art scientist

Before you start throwing negative prompts at the AI like a frustrated dungeon master, you actually need a way to run Stable Diffusion. Not all versions are created equal — some are relatively easy, while others require "hours of setup and a minor GPU sacrifice to the AI gods."

Mind you, most online versions require at least a login, and many charge subscriptions or give you only a free trial. Local installs like AUTOMATIC1111 and ComfyUI skip the account, but they want a decent GPU instead.

Here’s where to get started:

- Online versions if you just want to vibe and generate images: Websites like Hugging Face and RunDiffusion let you run Stable Diffusion without any installation. Super easy, but don’t expect god-tier customization.

- AUTOMATIC1111 if you want full control (and don’t mind fighting with Python): The go-to open-source UI for Stable Diffusion, packed with every feature you could ever need. Just be ready for a bit of setup and the occasional “Why isn’t this working?” moment.

- ComfyUI if you like nodes, graphs, and feeling like a sci-fi hacker: This one’s for power users who want modular control over every step of the AI process. Expect a learning curve but also godlike image customization.

- Stable Diffusion Lite if you don’t need the extra chaos: Some platforms strip down the options and just let you type prompts and generate images. You get no advanced settings, but there are also fewer headaches.

Step 2: Enter a positive prompt that actually makes sense

Before you even think about touching a negative prompt, your positive prompt needs to not suck.

If you type in “a guy” and expect Stable Diffusion to generate a photorealistic stunner, you’re setting yourself up for disappointment. AI is powerful, but it’s not a mind reader — you need to guide it with details, structure, and the right words. Otherwise, you’re rolling the dice on whether you get a stunning portrait or some blobfish-looking abomination with six chins.

Here’s how to write a positive prompt that doesn’t read like chaos magick:

- Be specific because AI is a clueless intern with unlimited energy: If you just say “a cool character,” the AI might give you a stick figure, a wizard, or a refrigerator with sunglasses. Instead, go for “a Dark Souls armor-clad mercenary with glowing blue eyes, cinematic lighting, ultra-realistic, 4K,” so the AI actually knows what you want.

- Use descriptive words that tell AI the vibe, not just the object: Asking for “a castle” is a rookie move. Do you mean a dark, haunted medieval fortress? A sparkling Disney castle? A brutalist dystopian nightmare? Give AI a style to work with so it doesn’t just make something at random.

- Tell it what medium you want, unless you like surprises: If you want a hyperrealistic image, you need to say so. If you want anime, digital painting, oil painting, or a 3D render, tell it directly — otherwise, Stable Diffusion might just mix everything together and give you something cursed.

- Don’t keyword spam like you’re summoning a demon: Some people think that adding every art-related keyword ever will improve the output. No, it just confuses the AI into generating a Frankenstein image where nothing makes sense. Keep it clear, concise, and structured.

- Consider the AI’s feelings. (Just kidding, but seriously, structure matters): The way you order words in a prompt actually changes the results. “A realistic knight in golden armor, intricate details” will give you something different from “intricate details, golden armor, a realistic knight.” Play around with word order to see what works best.
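If you script your generations, one way to keep positive prompts specific and consistently ordered is to assemble them from labeled parts. The sketch below is a hypothetical helper (`build_prompt` and its field names are ours, not part of any Stable Diffusion tool): it puts the subject first, then style, medium, and extra tags, and drops repeated keywords so you don’t slide into keyword spam.

```python
def build_prompt(subject: str, style: str = "", medium: str = "",
                 extras: tuple[str, ...] = ()) -> str:
    """Join prompt parts in a fixed order: subject, style, medium, extras.

    Hypothetical helper: word order changes results, so a fixed order
    makes experiments easier to repeat and compare.
    """
    seen = set()
    ordered = []
    for part in (subject, style, medium, *extras):
        cleaned = part.strip()
        key = cleaned.lower()
        # Skip empty fields and keywords we've already used.
        if cleaned and key not in seen:
            seen.add(key)
            ordered.append(cleaned)
    return ", ".join(ordered)


print(build_prompt(
    "a Dark Souls armor-clad mercenary with glowing blue eyes",
    style="cinematic lighting",
    medium="ultra-realistic",
    extras=("4K", "4k"),  # the duplicate "4k" is dropped
))
# a Dark Souls armor-clad mercenary with glowing blue eyes, cinematic lighting, ultra-realistic, 4K
```

Swapping the order of the arguments is an easy way to run the word-order experiment described above.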

Step 3: Find the negative prompt box and tell AI what not to do

Now that you’ve given Stable Diffusion clear instructions on what to generate, it’s time to tell it what to avoid. AI can be a little too creative sometimes, throwing in extra limbs, melted textures, and other artifacts that belong in a horror movie. Negative prompts are your way of saying “please, for the love of god, no” to all of that.

Here’s how to enter a negative prompt like a pro:

- Locate the negative prompt box before AI commits war crimes: AUTOMATIC1111 has a separate field labeled Negative Prompt. In ComfyUI, the negative prompt is a second CLIP Text Encode node wired into the sampler’s negative input. If you’re using a stripped-down version of Stable Diffusion, it might be hidden under Advanced Settings.

- Think of it as a ban list for bad AI habits: List the things you don’t want in your image, the stuff you want to dodge like that one dodgeball that nailed you in front of your whole gym class.

- Start simple, then refine based on results: Instead of dumping an entire paragraph of negative keywords, start with basic ones like blurry, distorted, low quality, extra fingers, and deformed face. If the AI still screws up, tweak the list and get more specific.

- Check community-tested negative prompts if you’re feeling lazy: Some users have already done the work of testing which keywords actually improve AI generations. Check Reddit, Discord, or AI art forums for ready-made lists.

- Balance negative prompts with positive ones so AI doesn’t overcorrect: If you go too hard with negatives, the AI might strip out important details and make everything look unnaturally smooth. The goal is to refine, not to just delete for the lulz.
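If you run Stable Diffusion from Python instead of a web UI, the negative prompt box is just another argument. Hugging Face’s diffusers library, for example, accepts `negative_prompt`, `guidance_scale`, and `num_inference_steps` when you call a `StableDiffusionPipeline`. The sketch below only assembles those arguments, so it runs without a GPU or a model download; the helper name, the starter list, and the default values are our assumptions, and the commented lines show roughly how you’d hand them to a loaded pipeline.

```python
# The "start simple" list from the step above.
STARTER_NEGATIVES = ["blurry", "distorted", "low quality",
                     "extra fingers", "deformed face"]


def generation_kwargs(prompt: str, negatives: list[str],
                      cfg_scale: float = 9.0, steps: int = 30) -> dict:
    """Bundle settings under the keyword names diffusers pipelines accept."""
    return {
        "prompt": prompt,
        # The negative prompt is passed as one comma-separated string.
        "negative_prompt": ", ".join(negatives),
        "guidance_scale": cfg_scale,
        "num_inference_steps": steps,
    }


kwargs = generation_kwargs("a realistic knight in golden armor", STARTER_NEGATIVES)
print(kwargs["negative_prompt"])
# blurry, distorted, low quality, extra fingers, deformed face

# With diffusers installed and a model available, roughly:
#   from diffusers import StableDiffusionPipeline
#   pipe = StableDiffusionPipeline.from_pretrained("<model id>")
#   image = pipe(**kwargs).images[0]
```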

Step 4: Fine-tune the output because AI doesn’t always listen the first time

Stable Diffusion — like all AI — always finds a way to mess up regardless of what you tell it to do. Even with a solid positive and negative prompt, the AI might misinterpret details, overcorrect, or straight-up ignore you.

That’s why fine-tuning settings is essential — you’re basically teaching the AI to behave.

Here’s how to get the best results:

- Tweak the CFG scale so that AI listens without becoming a control freak: The Classifier-Free Guidance (CFG) scale controls how much the AI follows your prompts. Too low (e.g., 4–7), and it might ignore some of your instructions. Too high (e.g., 15+), and it can overcorrect, leading to unnatural-looking images.

- Adjust sampling steps to avoid the “rushed sketch” look: More steps usually mean better image quality, but going too high wastes time without much improvement. Around 30–50 steps is the sweet spot for most models.

- Try different models because they all have very different personalities: Not every Stable Diffusion model treats prompts the same way. Some are great at realism, while others turn everything into anime. If your results are off, switch models instead of assuming your prompt is broken.

- Keep testing because AI takes trial and error: Even the best prompts aren’t one-size-fits-all. If your output still looks weird, tweak wording, reorder phrases, or adjust settings until you find the perfect combination.
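Trial and error goes faster when you change one thing at a time. Here’s a minimal sketch, assuming your UI or script lets you set a seed along with these settings: it lists every combination of a few CFG and step values while keeping the seed fixed, so differences between images come from the settings rather than a new random starting point. The function name and the sample values are illustrative only.

```python
from itertools import product


def settings_grid(cfg_values: list[float], step_values: list[int],
                  seed: int) -> list[dict]:
    """List every (CFG, steps) combination, all sharing one seed.

    "seed" is our own key; in diffusers you'd turn it into
    torch.Generator().manual_seed(seed) and pass that as generator=.
    """
    return [
        {"guidance_scale": cfg, "num_inference_steps": steps, "seed": seed}
        for cfg, steps in product(cfg_values, step_values)
    ]


grid = settings_grid([7.0, 9.0, 12.0], [30, 50], seed=1234)
print(len(grid))  # 6
for run in grid:
    print(run)
```

Six runs is a manageable batch to eyeball side by side; widen the lists only once you know roughly where the good results live.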

Step 5: Compare results and make sure AI isn’t being passive-aggressive

So you’ve got your positive prompt, a solid negative prompt, and fine-tuned settings. But how do you know if the AI is actually improving, or if it’s just gaslighting you into thinking this is “as good as it gets”? The answer: side-by-side comparisons.

Here’s how to test if your negative prompt is actually doing its job:

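- Run the same prompt with the same seed, with and without a negative prompt: Keeping the seed fixed means any difference comes from the negative prompt, not from a fresh random starting image. If both images look equally cursed, something’s off. A good negative prompt should noticeably remove weird textures, extra limbs, and other AI-generated nonsense.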

- Check if the AI is “overcorrecting” and making things weird in a new way: Sometimes, a strong negative prompt doesn’t just fix issues — it swings too hard in the other direction. If your detailed portrait now looks unnaturally smooth and lifeless, you might need to ease up on the negative terms.

- Look at fine details (especially faces and hands): AI-generated eyes, fingers, and teeth are often the first places where things go wrong. If your negative prompt is working, you should see fewer of those creepy, melted features.

- Make small tweaks and re-run the test: If your negative prompt isn’t fixing the problem, try adjusting the wording. Swapping “distorted” for “misshapen” or “blurry” for “low resolution” can sometimes get better results.
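To keep that with-and-without test fair, each seed can get two runs that differ only in the negative prompt. A small sketch of that layout follows; the function and key names are hypothetical, so plug the resulting settings into your own UI or script. Using a few seeds instead of one keeps a single lucky (or cursed) image from deciding the test.

```python
def ab_runs(prompt: str, negative_prompt: str,
            seeds: list[int]) -> list[tuple[dict, dict]]:
    """Pair a baseline run (no negative prompt) with a test run per seed."""
    pairs = []
    for seed in seeds:
        base = {"prompt": prompt, "negative_prompt": "", "seed": seed}
        # Copy the baseline and change only the negative prompt.
        test = {**base, "negative_prompt": negative_prompt}
        pairs.append((base, test))
    return pairs


for baseline, with_negatives in ab_runs(
    "a realistic portrait of an old fisherman",
    "distorted face, asymmetrical eyes, extra fingers",
    seeds=[1, 2, 3],
):
    print(baseline["seed"], repr(baseline["negative_prompt"]),
          "vs", repr(with_negatives["negative_prompt"]))
```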

How many words should a negative prompt in Stable Diffusion be?

If you’ve ever seen a negative prompt that looks like someone just copy-pasted the entire dictionary, you might be wondering if more words mean better results.

Spoiler: They don’t. The trick isn’t how many words you use — it’s which words actually matter.

Here’s what you need to know about negative prompt length:

- Start short, then expand if needed: A basic negative prompt like “blurry, distorted, extra fingers, low quality” will already improve most images. If problems persist, add more specific words instead of throwing in everything.

- Too many words can confuse AI instead of helping: Stable Diffusion isn’t reading your negative prompt like a human. It splits the text into tokens and encodes them all together, so a huge list of terms can actually dilute the effectiveness of the most important ones.

- Keep it under 20–30 words for best results: Most well-optimized negative prompts stay under a sentence or two. If you’re getting good results, adding more is just overkill.

- Test and remove words that don’t change the output: If adding “bad lighting” or “low contrast” makes no visible difference, cut them. Negative prompts work best when they’re focused on actual issues in your image.

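- Some models ignore extra-long negative prompts anyway: Overloading your negative prompt can get it, to put it politely, partly brushed off. Stable Diffusion’s CLIP text encoder reads 77 tokens at a time (75 once its start and end markers are counted), and one word can take up several tokens. Some tools simply cut off anything past that limit, while others, like AUTOMATIC1111, split long prompts into chunks. Either way, put the terms that matter most up front and don’t waste space.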
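To keep a negative prompt lean, you can tidy it up before each run. The sketch below is a hypothetical helper, not a feature of any particular UI: it splits a comma-separated negative prompt, drops repeats (ignoring case), and reports the word count so you can check it against the rough 20–30 word guideline above. Word count is only a stand-in here; the text encoder actually counts tokens.

```python
def tidy_negative_prompt(raw: str, max_words: int = 30) -> tuple[str, int, bool]:
    """Deduplicate comma-separated terms and check the total word count.

    Returns (cleaned prompt, word count, whether it fits under max_words).
    Word count is a rough proxy; CLIP-based models really count tokens.
    """
    terms = []
    seen = set()
    for term in raw.split(","):
        cleaned = " ".join(term.split())  # trim and collapse inner spaces
        if cleaned and cleaned.lower() not in seen:
            seen.add(cleaned.lower())
            terms.append(cleaned)
    word_count = sum(len(t.split()) for t in terms)
    return ", ".join(terms), word_count, word_count <= max_words


print(tidy_negative_prompt("blurry, Blurry,  extra   fingers, low quality,, blurry"))
# ('blurry, extra fingers, low quality', 5, True)
```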

5 negative prompt examples for different use cases

Negative prompts aren’t all the same.

The best one depends on what kind of image you’re making — portraits, landscapes, fantasy art, product mockups, and more. A good negative prompt removes distractions without messing up the details you actually want.

Here are some solid negative prompts for different types of AI-generated images:

1. General image cleanup: Stop AI from making your art look like a 144p YouTube video

Ever tried generating a high-quality image, only for it to come out looking like it was filmed on a potato from the early 2000s?

Stable Diffusion has a bad habit of forgetting what sharpness is, giving you images that look like they were recovered from a lost Bloodborne cutscene. If you don’t want your AI-generated art to resemble a glitched-out PlayStation game, a cleanup-focused negative prompt is your best bet.

Negative prompt: blurry, low-resolution, pixelated, grainy, overexposed, washed out, bad contrast

What it does: Forces AI to prioritize sharpness, proper lighting, and clear textures instead of producing low-detail, overexposed, or fuzzy messes. This keeps images looking crisp, well-balanced, and usable.

- Before: Images look muddy and unfocused, with soft edges and weird lighting issues. Textures appear stretched or smudged, and details get lost in AI’s attempt to “soften” everything.

- After: Images are sharper, more detailed, and actually resemble HD artwork. No more blurry faces, washed-out colors, or mysterious fog covering half the scene.

2. Portraits: Stop AI from making faces Gaspar Noé would love

One moment, Stable Diffusion is blessing you with a perfect model; the next, your character has two noses and a soul-piercing stare that belongs in an exorcism movie.

If your AI-generated people keep looking like rejects from a Junji Ito horror manga, this negative prompt setup will help.

Negative prompt: distorted face, asymmetrical eyes, warped features, extra fingers, deformed hands, weird teeth, unsettling expression

What it does: Prevents AI from generating faces that look like they’ve been through a meat grinder, keeping portraits natural and anatomically correct. Also stops AI from giving your characters “smiles” that belong in Silent Hill 2.

- Before: AI-generated faces often have uneven eyes, stretched lips, or teeth that look copy-pasted from several different people. Hands? Don’t even ask.

- After: Faces appear more proportional, with eyes that actually align and smiles that don’t make you question reality. Hands stop looking like eldritch appendages, and characters actually resemble humans instead of AI-generated cryptids.

3. Landscape images: Stop AI from giving you “alien planet” vibes when you just wanted a forest

You asked for a peaceful countryside scene, and Stable Diffusion decided you meant an alternate dimension where the grass is purple and the mountains are floating.

AI has a weird habit of turning normal landscapes into something straight out of a sci-fi movie. If you’re tired of generating forests that look like they belong in Avatar (but worse), it’s time to rein the AI in.

Negative prompt: overexposed sky, grainy textures, unnatural colors, weird lighting, random floating objects

What it does: Keeps AI-generated landscapes realistic and natural, avoiding surreal lighting effects, neon-colored vegetation, and unnecessary floating rocks that AI swears belong there.

- Before: Landscapes are oversaturated, with glowing blue rivers, radioactive-looking plants, and a sky that looks like it’s on fire. Sometimes, AI throws in random floating objects for no reason.

- After: The image has more balanced colors, natural lighting, and realistic textures. Forests actually look like places you could walk through, not a backdrop for an intergalactic war.

4. Fantasy art: Stop AI from throwing every effect imaginable onto the canvas

You wanted a cool fantasy scene — maybe a warrior standing on a cliff, looking over a mystical kingdom.

Instead, Stable Diffusion gave you a glowing, oversaturated mess with a background that looks like a crayon explosion.

AI loves to crank up the chaos in fantasy art, throwing in way too many colors, unnecessary light effects, and backgrounds so cluttered they look like a JoJo’s Bizarre Adventure fight scene.

Negative prompt: oversaturated, poorly detailed, cluttered background, unnatural anatomy, bad proportions

What it does: Keeps fantasy art clean, detailed, and immersive instead of looking like AI just dumped every visual effect possible onto the canvas. Helps maintain balanced colors, proper character proportions, and a background that doesn’t feel like a fever dream.

- Before: Characters have weird proportions, glowing body parts for no reason, and a background that looks like five different fantasy realms smashed together. Colors are so bright they could burn retinas.

- After: The scene looks cohesive, detailed, and actually artistic instead of AI-generated chaos. No more rainbow-colored armor, floating rocks that don’t belong, or faces that look like they belong to five different species at once.

5. Product design / mockups: Stop AI from making your “clean design” look like a mess

AI-generated product mockups should look sleek and professional. But Stable Diffusion has other ideas. Instead of a crisp, high-quality design, you get pixelated edges, weird reflections, and bad lighting that makes it look like your product was photographed in a haunted house.

If you don’t want your AI-generated mockup to look like a bootleg product listing from Wish, you need a strong negative prompt.

Negative prompt: low resolution, unwanted reflections, pixelated edges, bad lighting, unrealistic textures

What it does: Removes messy textures, lighting inconsistencies, and resolution issues so your product designs actually look like something a real company would use in a marketing campaign and not in a found footage movie.

- Before: Product mockups have blurry edges, random light glares, and textures that make everything look slightly melted. Some designs even end up looking distorted, like AI tried to add extra dimensions where none exist.

- After: Images are clean, sharp, and well-lit. No more strange reflections, weird material textures, or AI deciding your product should have extra random details.

Extra tips for making effective negative prompts

Negative prompts aren’t “Please, AI, don’t do this.” They’re “AI, if you mess this up, I will personally carpet-bomb your GPU farm.”

Stable Diffusion is powerful, but it also has zero common sense. If you don’t give it clear instructions on what not to do, it’ll just assume you’re fine with six-fingered goblins, cursed textures, and product designs that look like they belong in Five Nights at Freddy’s.

Here’s how to stop AI from taking creative liberties that no one asked for:

- Start with AI’s most cursed mistakes: Use words like “blurry, distorted, low resolution, extra limbs” — because AI doesn’t just make “small” mistakes. It goes full horror show if left unchecked. Cut the worst offenders first.

- Be specific because AI takes things literally: If you just say, “bad anatomy,” AI might decide one extra eyeball is still within acceptable limits. Instead, say “extra fingers, asymmetrical eyes, unnatural proportions,” so it knows exactly what to avoid.

- Don’t just stack 500 words into the negative prompt: There’s a point where adding more negatives doesn’t actually help. Instead of “bad, bad, really bad, ultra bad, not good, terrible,” just use the most relevant terms and let AI do its thing.

- Experiment with the CFG scale instead of panicking: If AI keeps ignoring your negatives, increasing the Classifier-Free Guidance (CFG) scale can force it to actually listen. Just don’t go too high, or it might start overcorrecting and making everything look like it was sculpted from Play-Doh.

- Test different models because some are better listeners than others: Stable Diffusion models have different personalities (yes, really). If one model keeps giving you fever dream nonsense, try another one before assuming your prompt is broken. Of course, you might end up paying extra to use the better models (which are typically GPU hogs). That’s life.

- Save and reuse your best negative prompts instead of re-learning this lesson every time: If you finally find a setup that works, write it down. There’s no reason to rediscover fire every time you generate an image. Over time, you’ll develop your very own prompt library — think of it as your Book of Spells.
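One low-effort way to start that library is a small JSON file. The sketch below stores the five example negative prompts from this article under preset names we made up and reads one back; the file name and keys are our own choices, and any format your tool can paste from works just as well.

```python
import json
from pathlib import Path

# The five example negative prompts from this article, keyed by use case.
PRESETS = {
    "cleanup": "blurry, low-resolution, pixelated, grainy, overexposed, washed out, bad contrast",
    "portrait": "distorted face, asymmetrical eyes, warped features, extra fingers, deformed hands, weird teeth, unsettling expression",
    "landscape": "overexposed sky, grainy textures, unnatural colors, weird lighting, random floating objects",
    "fantasy": "oversaturated, poorly detailed, cluttered background, unnatural anatomy, bad proportions",
    "product": "low resolution, unwanted reflections, pixelated edges, bad lighting, unrealistic textures",
}


def save_presets(path: Path, presets: dict[str, str]) -> None:
    """Write presets to a human-readable JSON file."""
    path.write_text(json.dumps(presets, indent=2), encoding="utf-8")


def load_preset(path: Path, name: str) -> str:
    """Read one preset back; raises KeyError if the name is missing."""
    return json.loads(path.read_text(encoding="utf-8"))[name]


library = Path("negative_prompts.json")
save_presets(library, PRESETS)
print(load_preset(library, "portrait"))
```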

Why are negative prompts important for AI art?

AI is basically a golden retriever — excited, full of energy, and absolutely terrible at taking hints. If you don’t tell it exactly what not to do, it will happily give your realistic portrait three arms or turn your product design into a Picasso via DMT. Negative prompts exist so you can stop AI from being "helpful" in all the wrong ways.

Here’s why negative prompts are a lifesaver:

- They save you from spending half your life fixing AI’s mistakes: Without negative prompts, you’ll spend more time in Photoshop than actually generating images. Who wants to manually erase five extra fingers from every hand?

- They help AI focus on the good details instead of throwing in random junk: When the AI is steered away from blurry, distorted, low-resolution nonsense, the image it lands on is much more likely to be the one you actually asked for.

- They make AI art actually usable instead of just “fun experiments”: If you want AI-generated images for marketing, concept art, or professional work, you can’t afford to have every output look like it was generated by Joe Rogan in the realm of the machine elves.

- They let you fine-tune results instead of playing AI roulette: Negative prompts give you control. Without them, you’re basically telling AI, “Just do whatever, I guess,” and hoping for the best.

FAQs

Can I use multiple negative prompts with Stable Diffusion simultaneously?

Yes, and honestly, you should. In practice, that means listing several comma-separated terms in the negative prompt box. AI has the memory of a goldfish and the impulse control of a toddler on espresso. If you don’t tell it exactly what not to do, it’ll just keep throwing extra limbs and distorted faces into the mix. Stacking multiple negative terms helps keep things in check.

Just don’t go overboard — too many negatives can make AI panic and start removing things it actually needs, like depth, detail, or the will to generate anything at all.

Can I use negative prompts with any Stable Diffusion model?

Mostly, yeah — negative prompts work across different models, but each model has its own way of interpreting them. Some models will follow instructions like a well-trained dog, while others will ignore half of what you say and give you something straight out of a fever dream.

If your negative prompt isn’t working, try trimming it down, swapping out words, or switching to a model that actually listens.

Do negative prompts always work with Stable Diffusion?

Not always — sometimes AI just decides it knows better, and it’s wrong. Some models will ignore certain negatives, while others might overcorrect and leave you with lifeless, overly smooth images that look like they were made in a mobile app from 2013. If your negatives aren’t doing their job, adjusting the CFG scale, sampling steps, or switching to a different model might give you better results than just yelling, "No more creepy smiles!" into the prompt box.

Are there limitations to using negative prompts with Stable Diffusion?

Absolutely — because AI, despite its god-like ability to create images out of thin air, still has the reasoning skills of a Roomba stuck in a corner. Negative prompts aren’t magical delete buttons. Instead of actually fixing a problem, AI sometimes just hides it in a way that technically counts as “fixed.”

Told it to stop adding extra fingers? Great, now it’s hiding the hand behind a conveniently placed object instead. Told it to stop making things blurry? Fantastic, now your image is sharp in all the wrong places and looks like it was shot with a 2001 digital camera.

How does the CFG scale affect negative prompts with Stable Diffusion?

The Classifier-Free Guidance (CFG) scale is basically an obedience meter for AI — except instead of training a dog, you’re trying to convince an overenthusiastic intern that no, we do not need more Comic Sans.

Here’s what the CFG scale numbers mean:

- Low CFG (4–7): AI treats your request as a suggestion. It’ll generate whatever it feels like, with only a vague attempt at listening to your input. Perfect if you like surprises—or if you want to see what happens when AI decides your knight in shining armor should have two heads for some reason.

- Medium CFG (8–12): AI listens to your prompt while still keeping some creative freedom. This is the sweet spot for most users — enough control to get what you want without making AI overthink itself into a lifeless, plastic-looking mess.

- High CFG (13–15+): AI follows your prompt exactly — which sounds great until you realize it’s so focused on doing what you said that it forgets to make things look natural. Faces start looking weirdly stiff, textures lose their depth, and everything kind of looks like an AI-generated stock photo.
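If you log your runs, a tiny helper can tag each CFG value with the bands described above. This is just those rule-of-thumb ranges written as code; the exact cut-offs between bands (for example, treating 7.5 as low) are our own reading, and the right values vary by model and sampler.

```python
def cfg_band(cfg_scale: float) -> str:
    """Map a CFG value to this article's rough bands.

    Assumed cut-offs: below 8 is low, 8 to 12 is medium, above 12 is high.
    """
    if cfg_scale < 8:
        return "low: treats the prompt as a suggestion"
    if cfg_scale <= 12:
        return "medium: the usual sweet spot"
    return "high: follows the prompt closely, may look stiff"


for value in (5, 7.5, 10, 14):
    print(value, "->", cfg_band(value))
```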

How do I find the right negative prompt for my project?

Trial and error—because AI follows instructions about as well as a toddler in a candy store. Start with the worst offenders first (blurry, distorted, extra fingers, bad anatomy) and refine from there. If your images still look weird, start getting more specific. Seeing creepy smiles? Add “unsettling expression.” Getting faces that look like they’re melting? Try “warped features, bad proportions.”
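That symptom-to-term habit is easy to write down. The sketch below starts from the worst-offender list and appends terms for whatever problems you spotted. The mapping only covers the two examples above, and the function and dictionary names are hypothetical, so extend it with your own observations.

```python
BASE_NEGATIVES = ["blurry", "distorted", "extra fingers", "bad anatomy"]

# Symptom -> extra negative terms, taken from the examples in this answer.
FIXES = {
    "creepy smiles": ["unsettling expression"],
    "melting faces": ["warped features", "bad proportions"],
}


def refine(negatives: list[str], observed: list[str]) -> list[str]:
    """Append terms for each observed problem, skipping ones already present."""
    result = list(negatives)  # copy so the starting list isn't modified
    for symptom in observed:
        for term in FIXES.get(symptom, []):
            if term not in result:
                result.append(term)
    return result


print(", ".join(refine(BASE_NEGATIVES, ["melting faces"])))
# blurry, distorted, extra fingers, bad anatomy, warped features, bad proportions
```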

Where do I find great negative prompts?

If you’re tired of playing 4D chess with AI’s weird logic, just steal from the internet. There are entire communities on Reddit and Discord that have already tested what works, so why suffer when you can just copy their homework? AI is cheating its way through life—might as well return the favor.

Can I use negative prompts in combination with style prompts?

Yes, and you should. Think of it like ordering a custom burger: the positive prompt tells AI what ingredients you want (juicy patty, crispy bacon, melted cheese), while the negative prompt makes sure AI doesn’t throw in random stuff you never asked for (pickles, mayo, an extra six fingers on the hand holding the burger). Using both together helps refine the style while steering clear of AI’s worst habits.

Why do some negative prompts seem to have no effect?

Because AI is like a stubborn toddler — sometimes, it just ignores you. There are a few reasons this happens:

- The negative prompt isn’t strong enough — try rewording or being more specific.

- The CFG scale is too low, so AI isn’t paying enough attention to your prompt.

- The model you’re using doesn’t react much to negative prompts — some are just built differently.

- You’re overloading AI with too many instructions, and it’s deciding which ones to prioritize (usually not the ones you want).
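If you want a quick self-check before rewording everything, most of those reasons can be turned into a checklist. Here’s a minimal sketch with assumed thresholds: it flags a CFG below 8 (the low band described earlier), more than 30 words (the rough length guideline), and a manual flag for models you already know respond weakly. Whether a term is specific enough is a judgment call, so the sketch just suggests rewording when nothing else stands out. None of these numbers are hard rules.

```python
def why_no_effect(cfg_scale: float, negative_prompt: str,
                  model_ignores_negatives: bool = False) -> list[str]:
    """Return likely reasons a negative prompt isn't changing the image.

    Thresholds are rules of thumb: CFG below 8, more than 30 words.
    """
    reasons = []
    if cfg_scale < 8:
        reasons.append("CFG scale is low; try raising it a little")
    word_count = len(negative_prompt.replace(",", " ").split())
    if word_count > 30:
        reasons.append(f"{word_count} words; trim to the terms that matter")
    if model_ignores_negatives:
        reasons.append("this model reacts weakly to negatives; try another")
    if not reasons:
        reasons.append("settings look fine; try rewording or being more specific")
    return reasons


print(why_no_effect(5, "blurry, distorted, extra fingers"))
# ['CFG scale is low; try raising it a little']
```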

Just make image generation easier by using Weights

Stable Diffusion is one of the most powerful AI image generators out there — but let’s not pretend it’s easy to use.

Let’s recap:

- If you want to run it locally: You’re looking at a full-on setup process that involves downloading Python, Git, and a model that can eat up 4–10GB of storage before you even generate a single image.

- Even if you go for a hosted version: You’re still paying for every generation (or locked into subscription plans), dealing with credit limits, or hoping the free version of Stable Diffusion doesn’t crash mid-prompt.

- And let’s talk interfaces: Stable Diffusion ain’t exactly beginner-friendly. You’re navigating command lines, adjusting settings like CFG scales and sampling steps, and figuring out how to write prompts that don’t result in mutant-looking people or landscapes that would make Team Silent blush.

If you’re into tweaking every detail and experimenting with AI models, it’s great. But if you just want to generate AI art without having to learn the AI equivalent of dark magic, you might be looking for something easier.

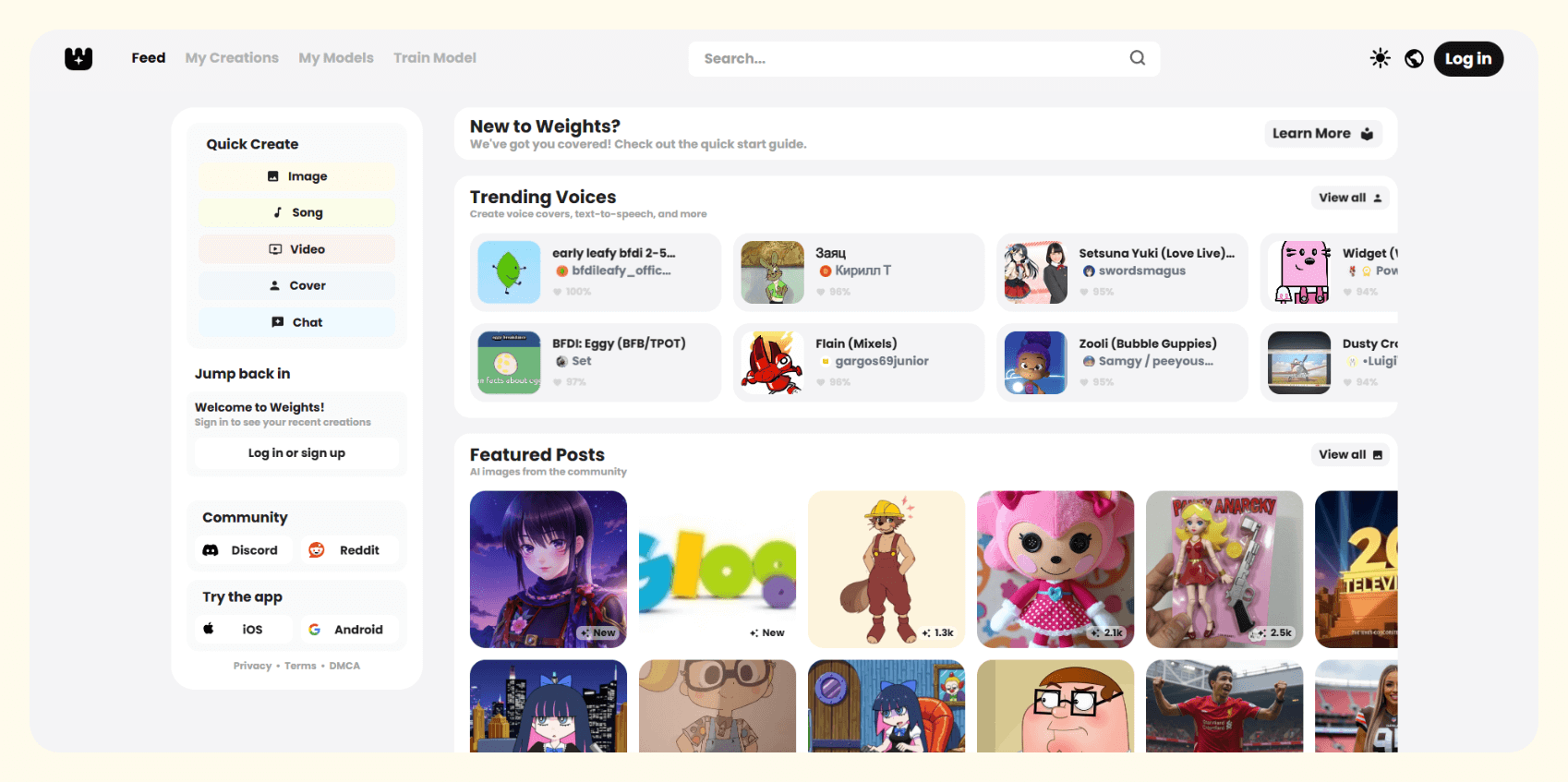

That’s where the website Weights.com comes in:

- Free forever: No weird trials, no sneaky credit systems — just generate images without restrictions.

- Works instantly: Zero setup, no Python installs, no GPU headaches. Just log in and start making AI art.

- More than just images: Create AI voices and song covers, generate AI videos, and even train your own custom models — all from a single platform.

- Pre-trained models at your fingertips: No need to fine-tune models from scratch — just pick a style and go.

- A built-in community: Share your cool images, discover trending models and pics, and see what others are making to get your inspo on.

If you’re deep into Stable Diffusion, keep tweaking and experimenting. But if you’d rather skip the hassle of becoming a Stable Diffusion negative prompt expert, try Weights for free.